Agenta - Prompt Management, Evaluation, and Observability for LLM Apps

Introduction

Agenta is an open-source platform for building Large Language Model (LLM) applications. It gives developers tools for prompt engineering, evaluation, debugging, and monitoring, with the goal of making complex LLM applications more reliable and better performing. Because prompts, evaluations, and traces live in one place, Agenta acts as a single source of truth that helps teams collaborate while managing the unpredictable behavior of LLMs.

Features

Centralized Workflow

Agenta centralizes prompts, evaluations, and traces on a single platform, so prompts are no longer scattered across Slack, Google Sheets, and email.

Collaborative Environment

With Agenta, product managers, developers, and domain experts work in the same place to create prompts, experiment with them, and iterate on them together.

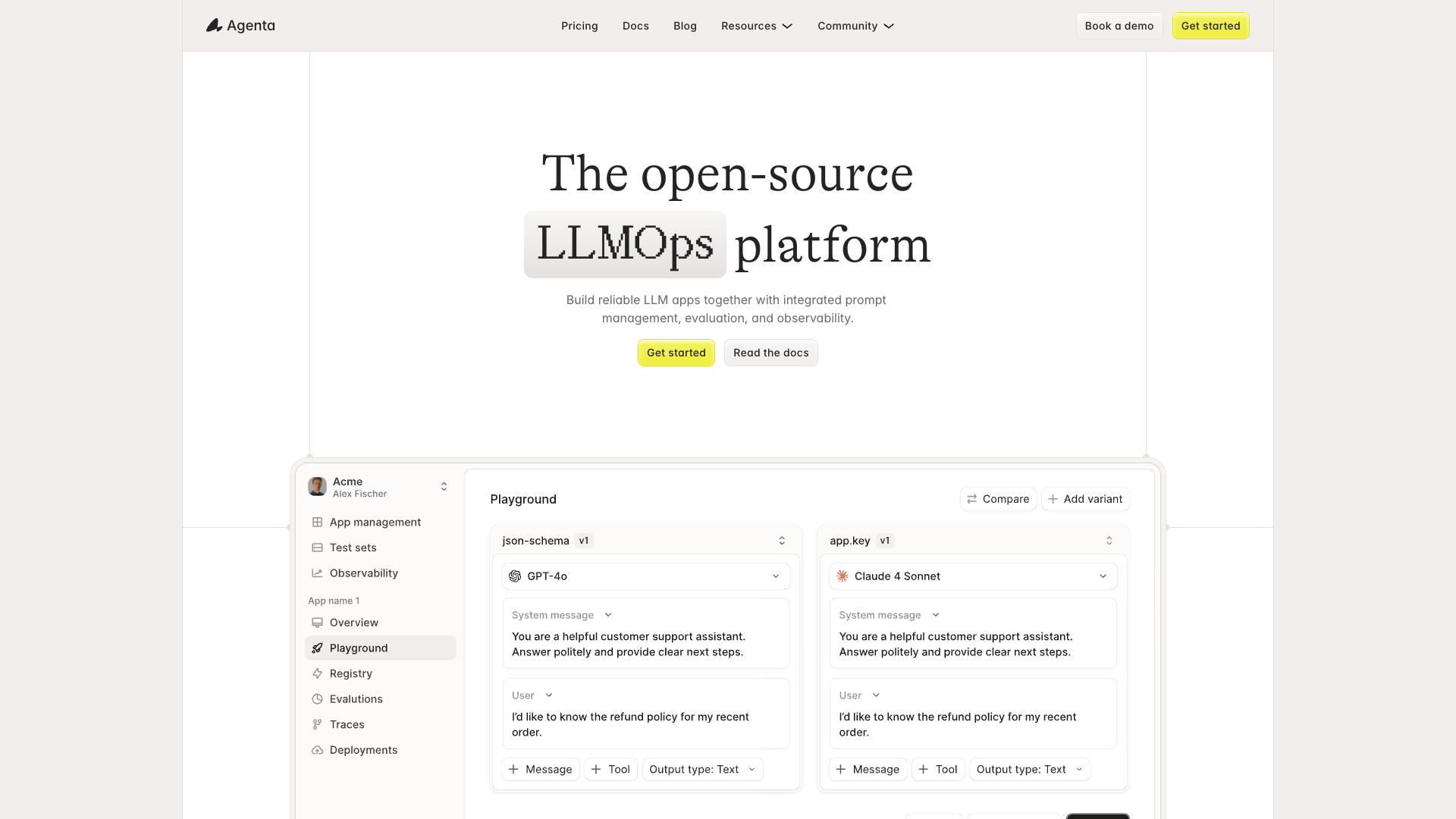

Unified Playground for Comparisons

Use Agenta's unified playground to compare prompts and models side by side. The playground also keeps a complete version history, so every change made to a prompt is tracked.
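
To make the idea of a prompt version history concrete, here is a minimal sketch in plain Python. It is not Agenta's SDK or data model: `PromptVersion`, `PromptHistory`, and the placeholder model names are hypothetical. It only illustrates the general technique of storing every prompt revision immutably so that any two versions can later be retrieved and compared.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    """One immutable revision of a prompt (hypothetical structure)."""
    number: int
    template: str
    model: str
    created_at: datetime


@dataclass
class PromptHistory:
    """Append-only version history for a single named prompt."""
    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, template: str, model: str) -> PromptVersion:
        # Never overwrite an earlier revision; every change becomes a new version.
        version = PromptVersion(
            number=len(self.versions) + 1,
            template=template,
            model=model,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        return version

    def get(self, number: int) -> PromptVersion:
        # Version numbers start at 1.
        return self.versions[number - 1]

    def diff(self, old: int, new: int) -> str:
        # Line-by-line unified diff of two templates, for side-by-side review.
        before = self.get(old).template.splitlines()
        after = self.get(new).template.splitlines()
        return "\n".join(
            difflib.unified_diff(before, after, f"v{old}", f"v{new}", lineterm="")
        )


history = PromptHistory("support-reply")
history.commit("Answer the customer politely.", model="model-a")
history.commit("Answer the customer politely and briefly.", model="model-b")
print(history.diff(1, 2))
```

Making each version immutable and append-only means an old prompt can always be restored exactly, which is what makes a change history trustworthy for comparisons.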

Model Agnostic Solutions

Choose the best model from any provider without vendor lock-in. Agenta lets you integrate and switch between models from different providers.

Automated Evaluation System

Replace guesswork with evidence. Agenta lets you run evaluation experiments systematically, track their results, and validate changes against those results.
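
The core loop behind an evaluation experiment can be sketched as follows: run each prompt variant over the same test set, score every output with an evaluator, and compare the mean scores before accepting a change. This is an illustration of the technique, not Agenta's API. The exact-match evaluator, the tiny test set, and the `variant_a`/`variant_b` stand-ins (which replace real LLM calls) are all hypothetical.

```python
from typing import Callable

# A test case pairs an input with its expected answer (illustrative data only).
TestCase = tuple[str, str]


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the normalized output equals the expected answer, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(
    variant: Callable[[str], str],
    test_set: list[TestCase],
    evaluator: Callable[[str, str], float] = exact_match,
) -> float:
    """Run one prompt variant over every test case and return its mean score."""
    scores = [evaluator(variant(question), expected) for question, expected in test_set]
    return sum(scores) / len(scores)


# Hypothetical stand-ins for two prompt variants; a real app would call an LLM.
def variant_a(question: str) -> str:
    return {"2+2": "4", "capital of France": "paris"}.get(question, "unknown")


def variant_b(question: str) -> str:
    return {"2+2": "4"}.get(question, "unknown")


test_set = [("2+2", "4"), ("capital of France", "Paris")]
results = {
    "A": run_experiment(variant_a, test_set),
    "B": run_experiment(variant_b, test_set),
}
print(results)  # {'A': 1.0, 'B': 0.5}

# Validate the change: only accept variant B if it scores at least as well as A.
accept_b = results["B"] >= results["A"]
print(accept_b)  # False
```

Keeping the evaluator a plain function makes it easy to swap exact match for a fuzzier metric or an LLM-as-judge scorer without changing the experiment loop.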

Debugging and Observability

Debug AI systems with detailed traces. You can annotate traces together with your team or collect feedback from users, which helps pinpoint exactly where a request failed.
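
A trace records each step of a request as a nested span, so the step where an error originated can be located. The plain-Python sketch below illustrates that idea; `Span`, `Tracer`, and `failure_points` are hypothetical names and are not part of Agenta's SDK (consult the Agenta documentation for its actual instrumentation). The `annotations` list stands in for the notes a team member might attach to a trace.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    """One step of a request, e.g. retrieval or an LLM call (hypothetical)."""
    name: str
    # Excluded from repr/compare to avoid infinite recursion through children.
    parent: Optional["Span"] = field(default=None, repr=False, compare=False)
    children: list["Span"] = field(default_factory=list)
    duration_s: float = 0.0
    error: Optional[str] = None
    annotations: list[str] = field(default_factory=list)


class Tracer:
    """Records nested spans; each top-level span is one trace."""

    def __init__(self) -> None:
        self.roots: list[Span] = []
        self._current: Optional[Span] = None

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        span = Span(name, parent=self._current)
        if self._current is None:
            self.roots.append(span)
        else:
            self._current.children.append(span)
        self._current = span
        start = time.perf_counter()
        try:
            yield span
        except Exception as exc:
            span.error = repr(exc)  # record the failure, then let it propagate
            raise
        finally:
            span.duration_s = time.perf_counter() - start
            self._current = span.parent


def failure_points(span: Span) -> list[str]:
    """Return the deepest spans that recorded an error (where failures originated)."""
    failed_children = [child for child in span.children if child.error]
    if span.error and not failed_children:
        return [span.name]
    return [name for child in failed_children for name in failure_points(child)]


tracer = Tracer()
try:
    with tracer.span("answer_question"):
        with tracer.span("retrieve_documents"):
            pass
        with tracer.span("call_llm"):
            raise TimeoutError("model did not respond")
except TimeoutError:
    pass

root = tracer.roots[0]
root.annotations.append("Timeout in the model call, not a prompt issue")
print(failure_points(root))  # ['call_llm']
```

Because an exception propagates upward, every enclosing span also records it; searching for the deepest failing span is what separates the true failure point from its parents.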

Performance Monitoring

Agenta monitors performance in real time and uses live, online evaluations to detect regressions quickly.
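
One common way to turn online evaluation scores into a regression signal is to compare a rolling mean of recent scores against a known-good baseline. The sketch below assumes each live response has already been scored between 0 and 1 by some evaluator; the `RegressionMonitor` class and its `baseline`, `window`, and `tolerance` parameters are hypothetical and do not describe how Agenta implements its monitoring.

```python
from collections import deque
from statistics import mean


class RegressionMonitor:
    """Flags a regression when the rolling mean of live evaluation scores drops
    more than `tolerance` below a known-good baseline (hypothetical helper)."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque[float] = deque(maxlen=window)  # keeps only the latest scores

    def record(self, score: float) -> bool:
        """Add one online evaluation score; return True if a regression is detected."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window so a single bad response cannot alert
        return mean(self.scores) < self.baseline - self.tolerance


monitor = RegressionMonitor(baseline=0.9, window=4, tolerance=0.05)
alerts = [monitor.record(s) for s in [0.92, 0.91, 0.90, 0.70, 0.72]]
print(alerts)  # [False, False, False, False, True]
```

A single low score (0.70) does not trigger an alert on its own; only when a second low score pulls the rolling mean below the threshold does the monitor report a regression. The window size trades detection speed against false alarms.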

User-Friendly Interface

Domain experts can experiment with prompts safely without writing code. Product managers and experts can also run evaluations and compare experiments directly from the user interface.

Frequently Asked Questions

How does Agenta enhance collaboration among team members?

Agenta gives product managers, developers, and domain experts one shared platform for managing and evaluating prompts, so everyone works from the same prompts, results, and traces.

Is Agenta a vendor-locked platform?

No. Agenta is model agnostic, so you can use models from any provider and choose whichever works best for your application.

Can I integrate Agenta with my existing tools?

Yes. Agenta integrates with tools such as LangChain, LlamaIndex, and OpenAI, among others, so you can add it to your existing tech stack.

Is Agenta suitable for teams new to LLM development?

Yes. Agenta is designed to help teams at every experience level build and manage LLM applications, and it provides documentation and community support to help newcomers get started.

How do I get started with Agenta?

You can start by reading the Agenta documentation, joining the community on Slack, or booking a demo to see how Agenta fits into your LLM development process.